The Influence of Parametrised Tasks on Learners’ Judgement Accuracy – A Secondary Analysis

EARLI 2025 - August 26th, 2025 - Graz, Austria

Karlsruhe University of Education, Germany

Relevance

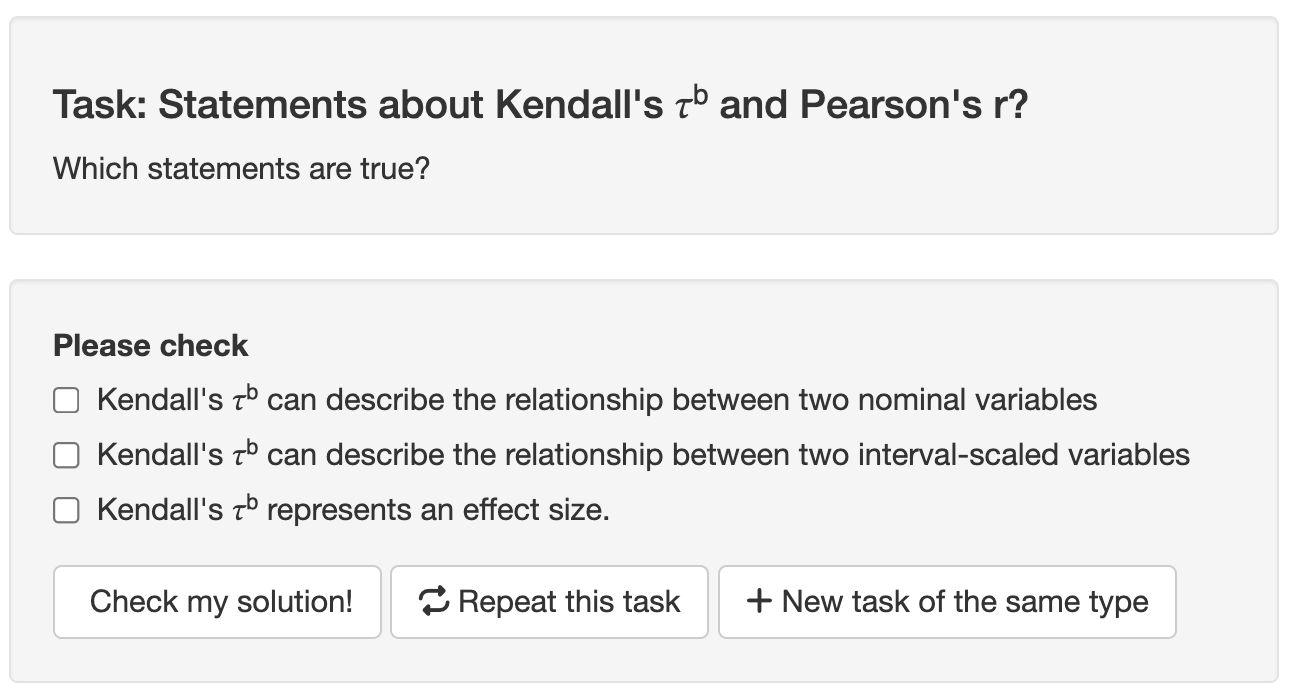

Parametrised tasks are tasks with varying parameters (e.g., Michael, 2021)

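- non-parametrised tasks can be repeated, but each repetition presents the identical task

- parametrised tasks can be repeated, and each repetition generates a new task (same structure, new parameter values)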
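A minimal sketch of the difference, assuming a hypothetical task template ("compute the mean of four numbers"). The poster does not state the task content, so the template, the value range 1 to 20 and all function names here are illustrative only. The non-parametrised version always returns the same task. The parametrised version keeps the template and redraws its parameters on every call.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    prompt: str
    values: tuple[int, ...]
    solution: float


def _make_task(values: tuple[int, ...]) -> Task:
    # Hypothetical task template: "compute the arithmetic mean"
    return Task(
        prompt=f"Compute the mean of {list(values)}.",
        values=values,
        solution=sum(values) / len(values),
    )


def non_parametrised_task() -> Task:
    """Fixed parameters: every repetition yields the identical task."""
    return _make_task((2, 4, 6, 8))


def parametrised_task(rng: random.Random) -> Task:
    """Same template, but the parameters (the data values) are redrawn on each call."""
    values = tuple(rng.randint(1, 20) for _ in range(4))
    return _make_task(values)


if __name__ == "__main__":
    rng = random.Random(2025)  # seeded so a learner's task sequence can be reproduced
    print(non_parametrised_task().prompt)
    print(non_parametrised_task().prompt)  # same task again
    print(parametrised_task(rng).prompt)
    print(parametrised_task(rng).prompt)   # new values, same structure
```

Seeding the generator is one design choice for making a learner's sequence of task instances reproducible. Whether the original tasks were generated this way is not stated.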

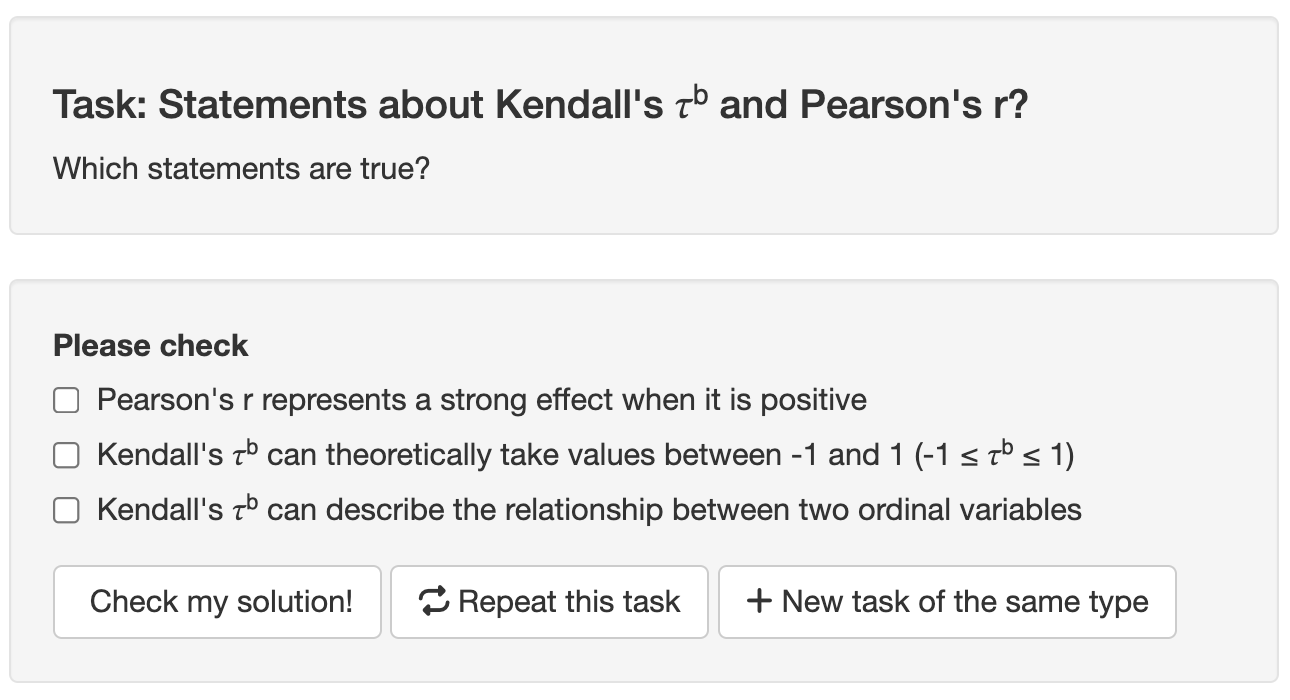

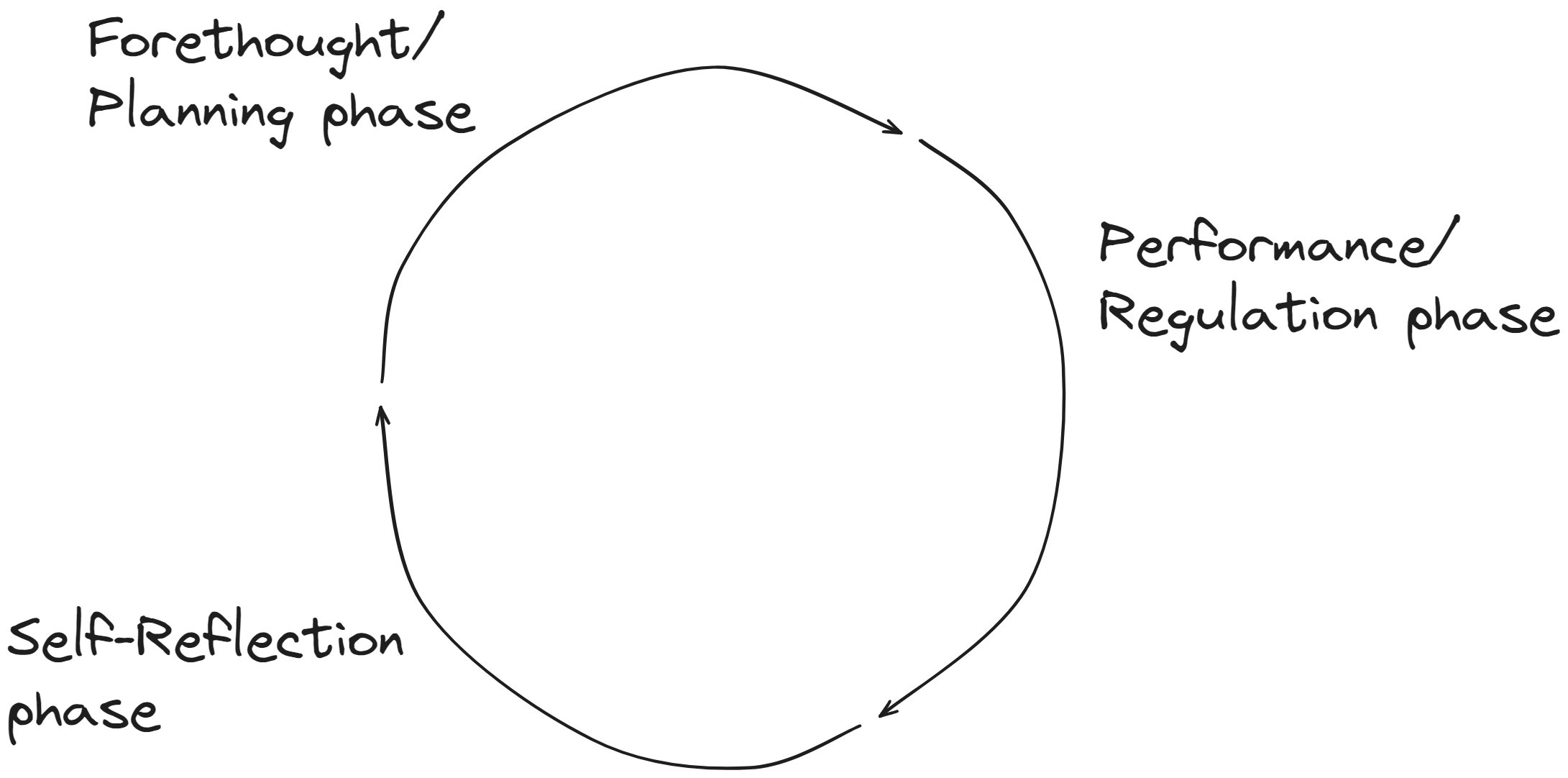

Self-regulated learning (SRL): cyclical model by Zimmerman (2000)

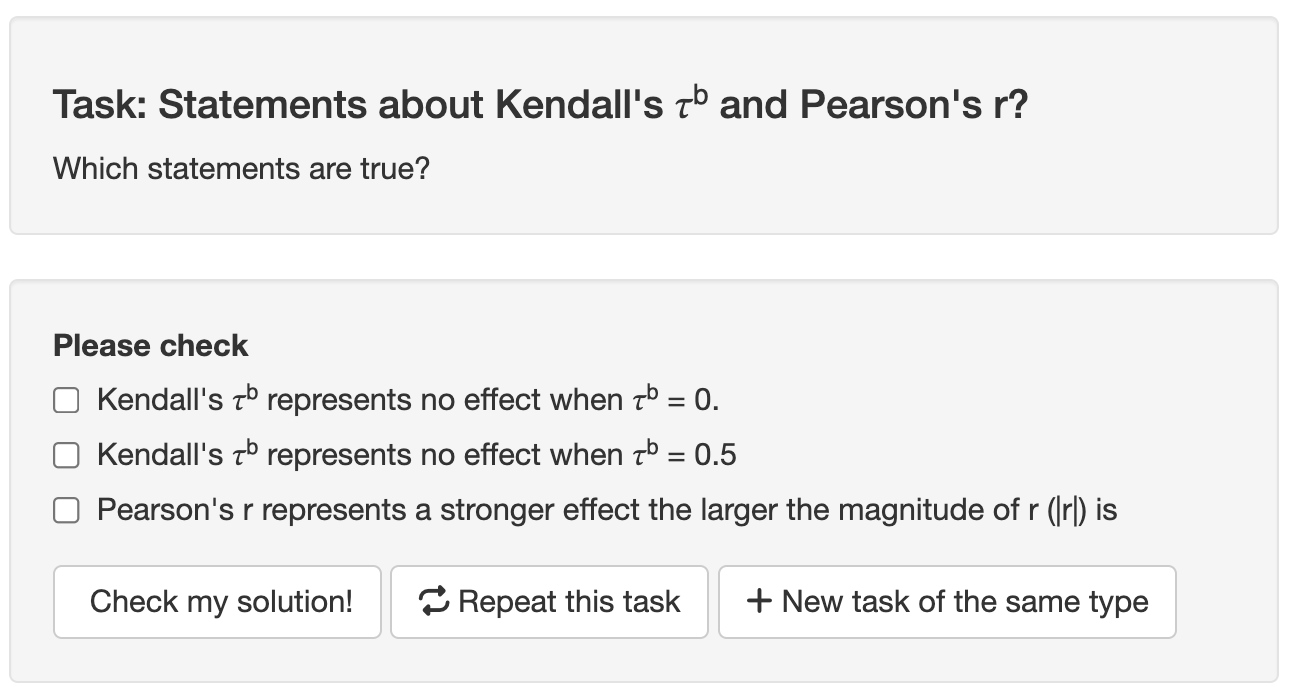

Judgments

Performance judgment (e.g., Schraw, 2009)

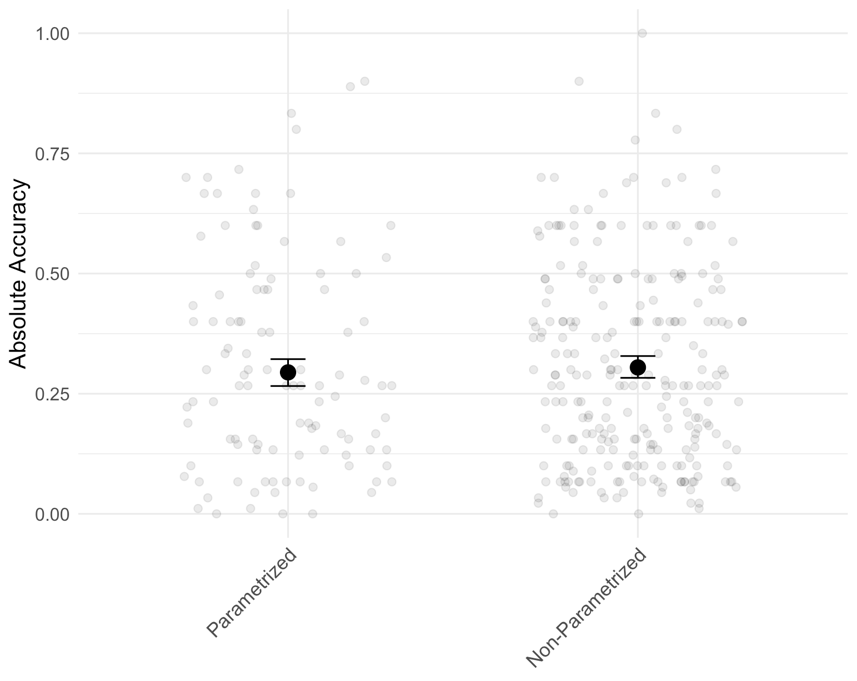

Judgment Accuracy

Absolute Accuracy (Maki et al., 2005)

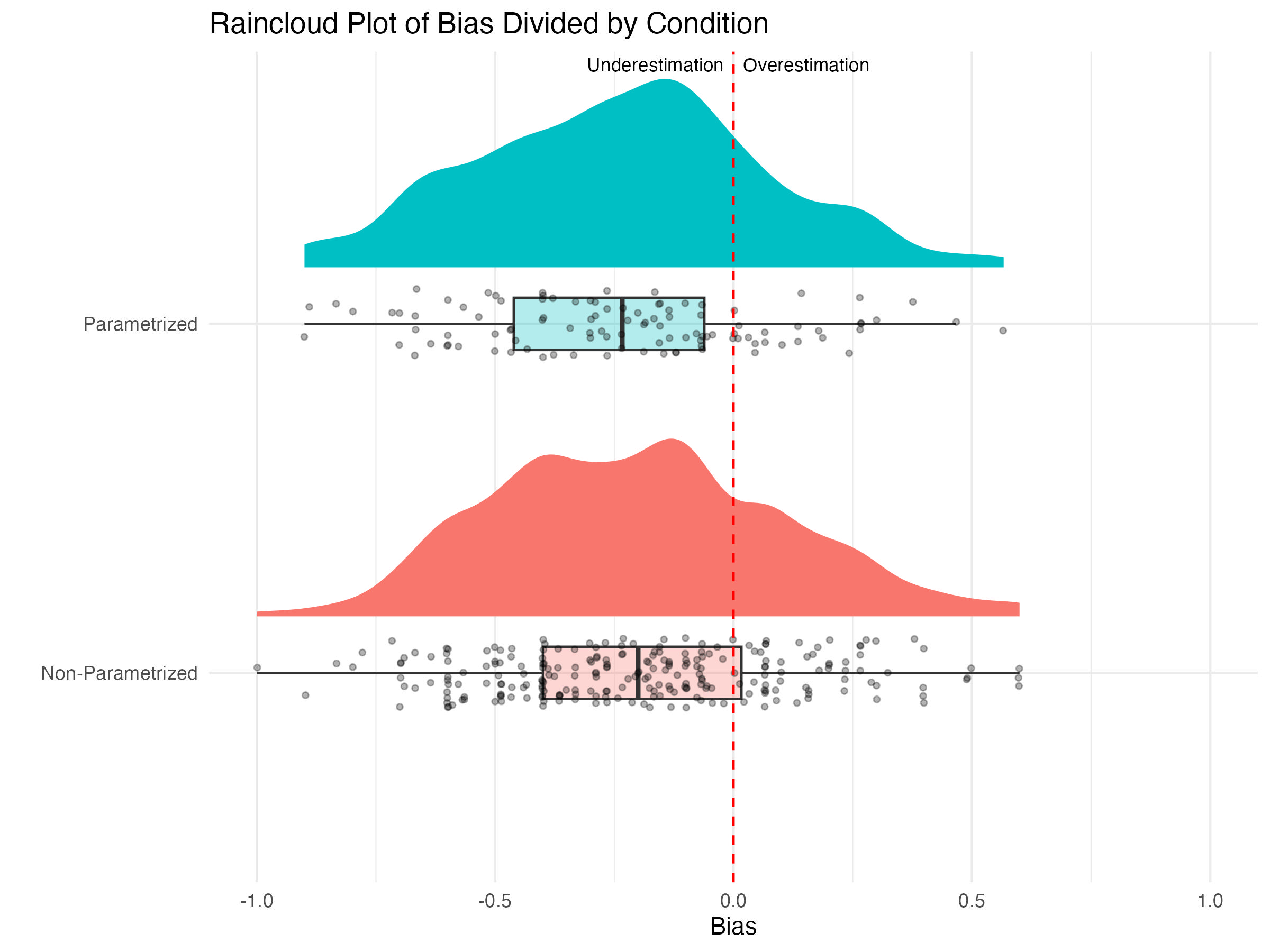
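The exact formula shown in the figure is not recoverable from the text. One common operationalisation of absolute accuracy is the unsigned distance between judged and actual performance, where 0 means a perfectly accurate judgment. Some studies use the squared difference instead. The sketch below assumes the unsigned distance and a shared 0 to 100 percentage scale. The function names are hypothetical.

```python
from statistics import mean


def absolute_accuracy(judgment: float, performance: float) -> float:
    """Unsigned distance between judged and actual performance (0 = perfectly accurate).

    Both values are assumed to be on the same 0-100 percentage scale.
    """
    for value in (judgment, performance):
        if not 0 <= value <= 100:
            raise ValueError(f"expected a value between 0 and 100, got {value}")
    return abs(judgment - performance)


def mean_absolute_accuracy(pairs: list[tuple[float, float]]) -> float:
    """Average absolute accuracy over several (judgment, performance) pairs."""
    return mean(absolute_accuracy(j, p) for j, p in pairs)


if __name__ == "__main__":
    # Hypothetical learner: judged 80 % but scored 60 %, then judged 50 % and scored 70 %
    print(mean_absolute_accuracy([(80, 60), (50, 70)]))  # both deviations are 20 points
```

Absolute accuracy ignores direction, so an overestimation and an underestimation of the same size receive the same score.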

Bias (Schraw, 2009)

- Overestimation (e.g., Prinz et al., 2020)

- Underestimation (Koriat et al., 2002)
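Bias keeps the sign that absolute accuracy discards. Following the definitions in the appendix (overestimation: self-assessment > performance; underestimation: self-assessment < performance), a minimal sketch could look like this. The `tolerance` parameter, which treats small deviations as accurate, is a hypothetical addition and not part of the poster.

```python
def bias(judgment: float, performance: float) -> float:
    """Signed difference: positive = overestimation, negative = underestimation."""
    return judgment - performance


def classify(judgment: float, performance: float, tolerance: float = 0.0) -> str:
    """Label a single judgment; `tolerance` (hypothetical) treats small deviations as accurate."""
    b = bias(judgment, performance)
    if b > tolerance:
        return "overestimation"   # self-assessment > performance
    if b < -tolerance:
        return "underestimation"  # self-assessment < performance
    return "accurate"


if __name__ == "__main__":
    for j, p in [(80, 60), (40, 60), (62, 60)]:
        print(j, p, bias(j, p), classify(j, p, tolerance=5))
```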

Hypothesis

Students’ judgments are more accurate (i.e., show less overestimation or less underestimation) after working on parametrised tasks than after working on non-parametrised tasks.

Method

Design

- experimental field study with pre-service teachers

- within-person design
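In a within-person design, every participant works on both task types, so each person serves as their own comparison. The descriptive sketch below illustrates that logic only. It is not the analysis reported on the poster, which used a Bayesian multilevel model. The condition labels, the record layout and the idea of using absolute accuracy as the score are assumptions.

```python
from collections import defaultdict
from statistics import mean

PARAM, NON_PARAM = "parametrised", "non-parametrised"


def within_person_differences(records):
    """Per participant: mean score under parametrised minus mean score under non-parametrised tasks.

    `records` is an iterable of (participant_id, condition, score) tuples, where
    score could be, e.g., absolute accuracy. Participants observed in only one
    condition are skipped, because a within-person contrast needs both.
    """
    scores = defaultdict(lambda: defaultdict(list))
    for pid, condition, score in records:
        scores[pid][condition].append(score)
    return {
        pid: mean(by_cond[PARAM]) - mean(by_cond[NON_PARAM])
        for pid, by_cond in scores.items()
        if by_cond[PARAM] and by_cond[NON_PARAM]
    }


if __name__ == "__main__":
    # Hypothetical records, not study data
    demo = [
        ("p1", PARAM, 10), ("p1", NON_PARAM, 20),
        ("p2", PARAM, -5), ("p2", NON_PARAM, -5),
        ("p3", PARAM, 0),  # only one condition observed -> excluded
    ]
    print(within_person_differences(demo))
```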

Participants

- N = 174 pre-service teachers

- M_age = 21.5 years (SD = 3.26)

- 78.7% female

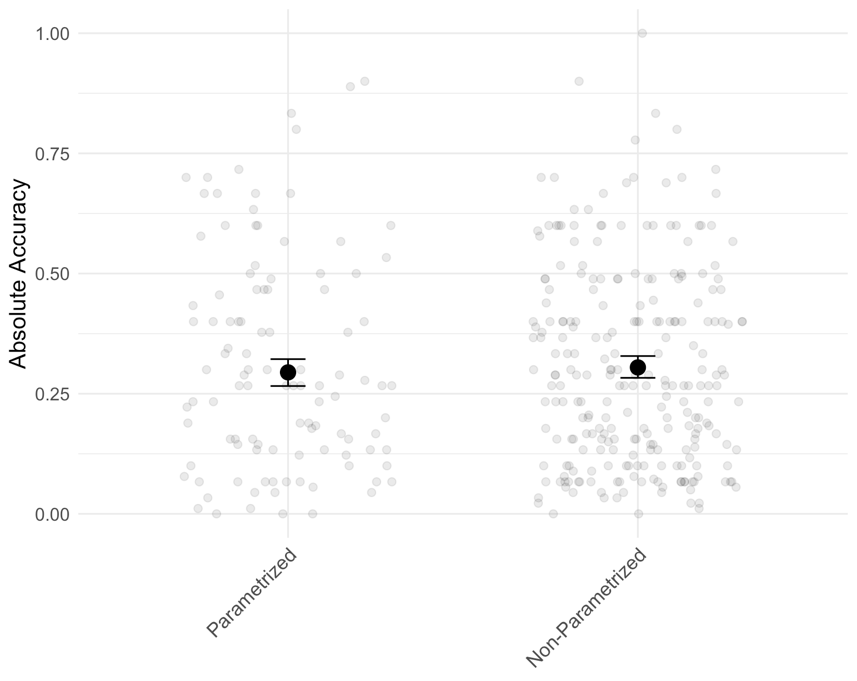

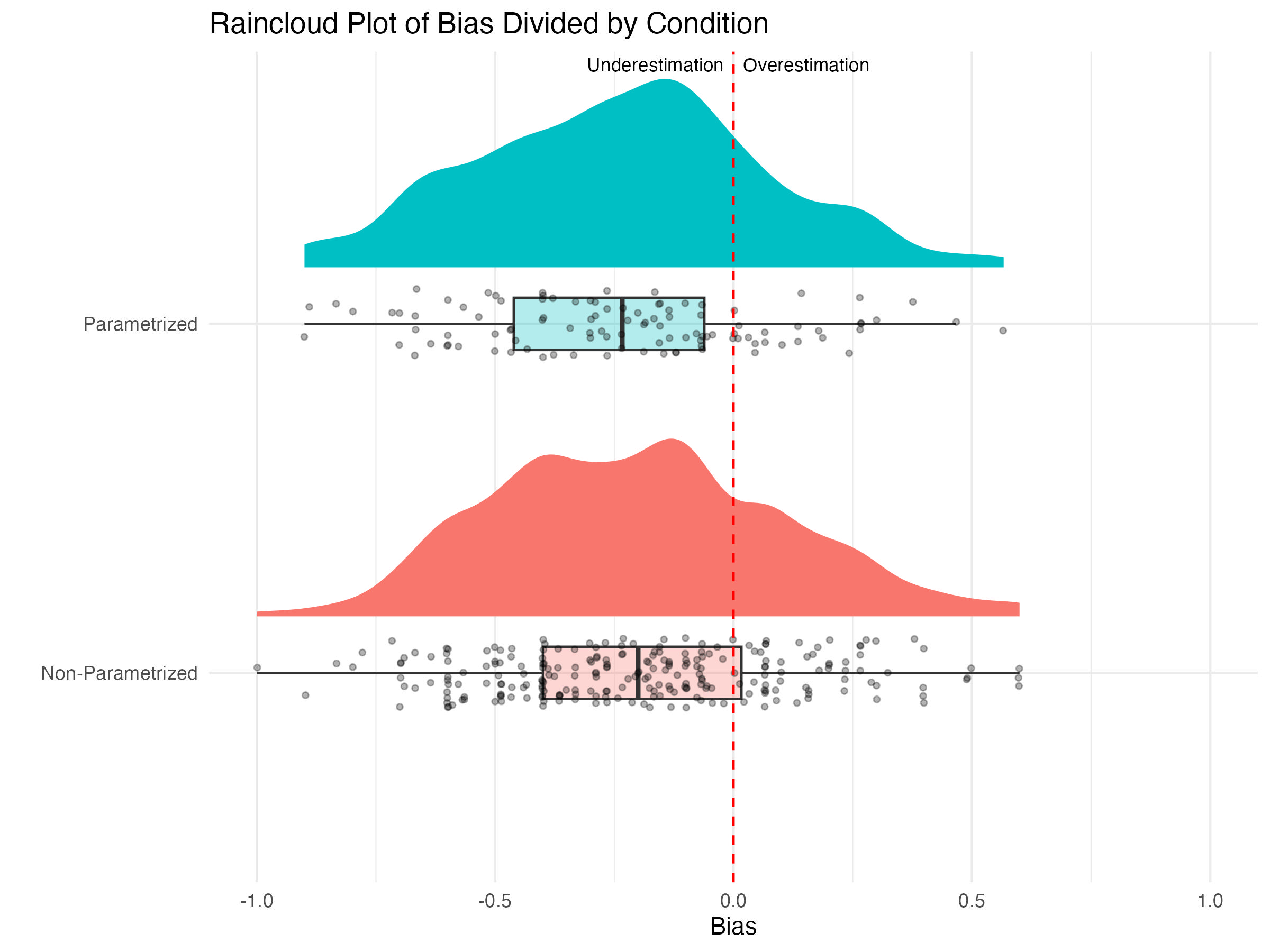

Results

Bayesian multilevel model (Bürkner, 2017)
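The poster does not spell out the model formula. As a hedged illustration only, a random-intercept model for this within-person design could look as follows. The outcome $A_{ij}$ (judgment accuracy of participant $j$ on observation $i$), the 0/1 coding of condition (1 = parametrised) and the Gaussian likelihood are assumptions, and priors are omitted. Under this reading, $\beta_1$ corresponds to the "effect of condition".

```latex
\begin{aligned}
A_{ij} &= \beta_0 + \beta_1\,\text{Condition}_{ij} + u_{0j} + \varepsilon_{ij},\\
u_{0j} &\sim \mathcal{N}(0, \tau^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\end{aligned}
```

In brms syntax, a model of this shape could be written as `accuracy ~ condition + (1 | participant)`. The variable names are placeholders.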


- The estimated effect of condition was small and uncertain (β = 0.01, 95% CI [−0.02, 0.04]).

No differences in judgment accuracy between the two types of tasks.
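An effect is called "uncertain" here because its 95% interval spans zero (−0.02 to 0.04). The sketch below shows how an equal-tailed credible interval and the share of positive draws can be read off posterior draws for one coefficient. In brms itself, such intervals are typically taken from `summary()` or `fixef()`. The draws used in the usage example are hypothetical and are not the study's posterior.

```python
import math


def quantile(sorted_draws, p):
    """Linear-interpolation quantile of already sorted values (0 <= p <= 1)."""
    pos = p * (len(sorted_draws) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return sorted_draws[lo] + (sorted_draws[hi] - sorted_draws[lo]) * (pos - lo)


def summarise_draws(draws, level=0.95):
    """Equal-tailed credible interval and share of positive draws for one coefficient."""
    xs = sorted(draws)
    alpha = (1 - level) / 2
    lower, upper = quantile(xs, alpha), quantile(xs, 1 - alpha)
    return {
        "lower": lower,
        "upper": upper,
        "includes_zero": lower <= 0 <= upper,
        "prob_positive": sum(x > 0 for x in xs) / len(xs),
    }


if __name__ == "__main__":
    # Hypothetical draws for illustration only
    print(summarise_draws([x / 100 for x in range(-50, 151)]))
```

An interval that includes zero means the data are compatible with no effect, a small positive effect, or a small negative effect.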

Exploratory analysis

Discussion

Why did we not find any differences?

- high (intrinsic) cognitive load for both types of tasks (Golke et al., 2022; Seufert, 2018, 2020)

- generalized judgment strategy (Hoch et al., 2023)

Underestimation

This pattern occurs less often than overestimation.

- underconfidence-with-practice (UWP) effect (Koriat et al., 2002)

- data-driven interpretation of effort (Baars et al., 2020; David et al., 2024)

- statistics anxiety (McIntee et al., 2022)

Implications

More research is needed on the effects of parametrised tasks on learners’ judgments

- e.g., other domains, between-subjects designs, underlying cognitive processes

Focus on underestimation

- underresearched


Contact: Theresa Walesch (they/them), theresa.walesch@ph-karlsruhe.de

Summary findings


Appendix

Overestimation

(Self-assessment > Performance)

- occurs more frequently with novices or new topics (Golke et al., 2022)

- Risk: stopping learning too early

- Consequence: knowledge gaps → poorer performance (Dunlosky & Rawson, 2012)

Underestimation

(Self-assessment < Performance)

- may occur after some practice → “underconfidence-with-practice effect” (Koriat et al., 2002)

- Risk: ineffective regulation (Sarac & Tarhan, 2009)

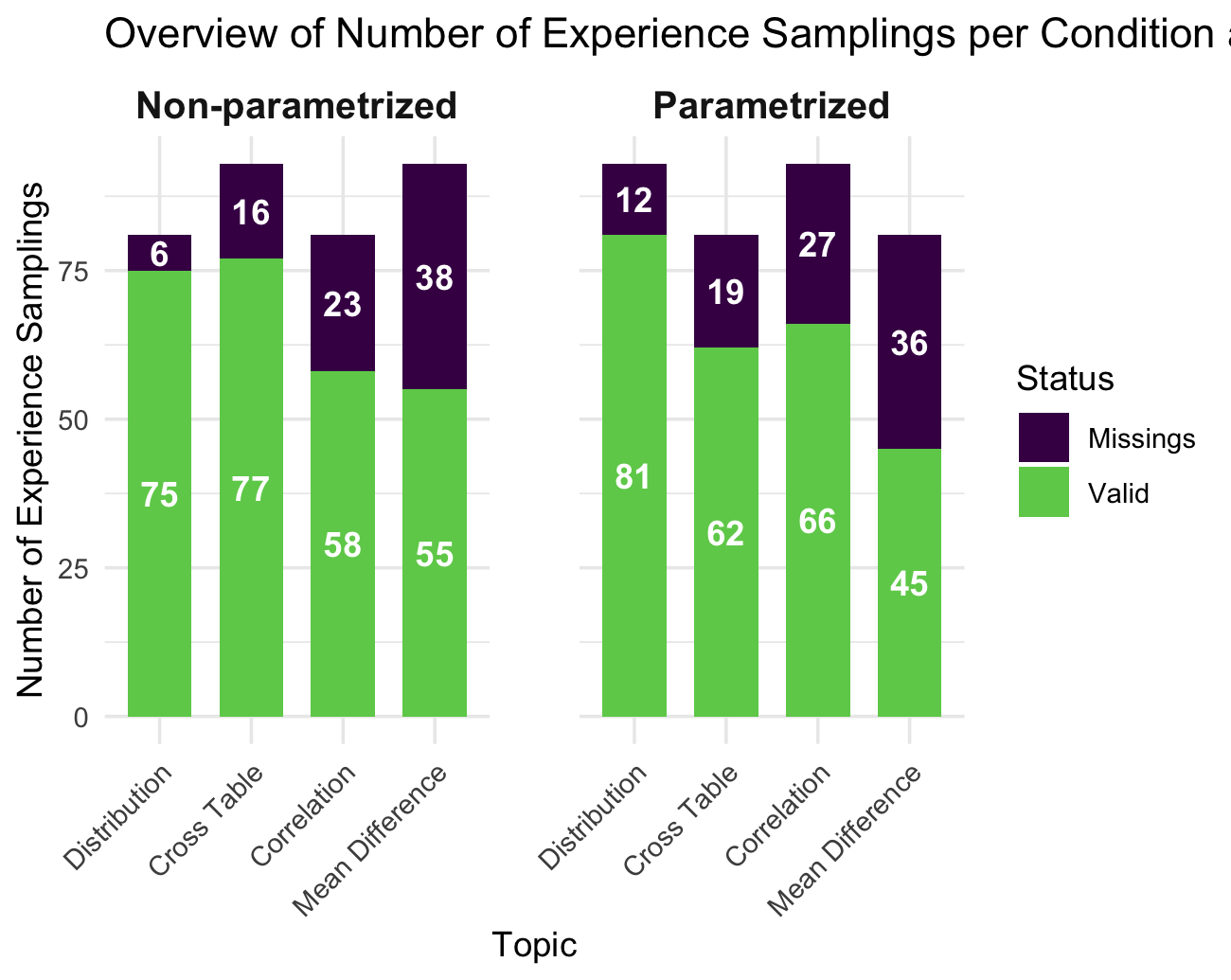

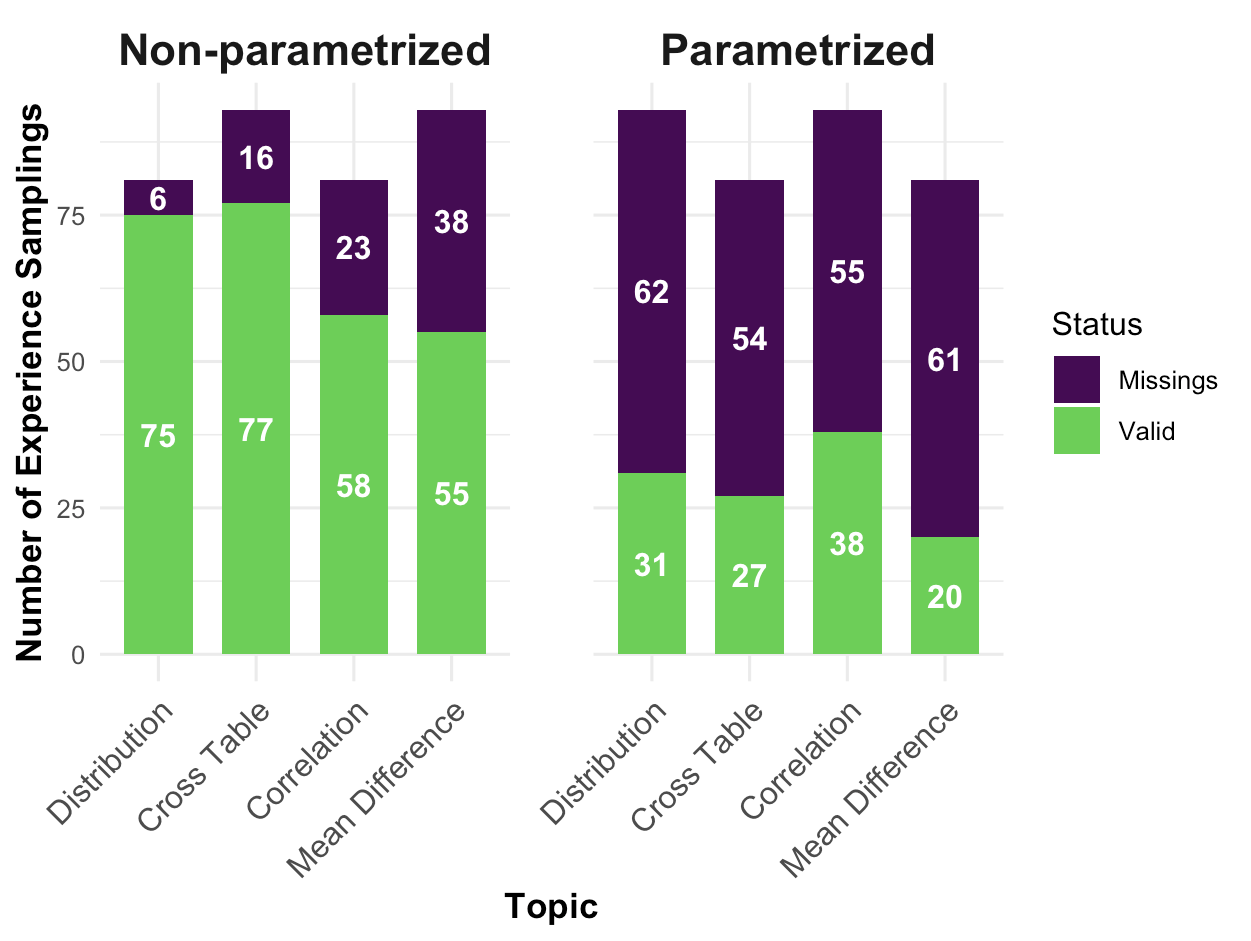

Missingness

Missingness mechanisms definitions

- MAR (missing at random): missingness depends on observed data but not on unobserved data (Schafer & Graham, 2002)

- MCAR (missing completely at random): missingness is independent of both observed and unobserved variables (Schafer & Graham, 2002)

- MNAR (missing not at random): missingness depends on the missing values themselves (Graham, 2009)
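To make the three mechanisms concrete, the simulation below uses hypothetical variables. `x` is always observed and correlated with `y`, and only `y` can go missing. It then compares the mean of the observed `y` values with the mean of the full `y`. The missingness probabilities (0.3, 0.6, 0.1) are arbitrary choices for illustration.

```python
import random
from statistics import mean


def simulate(mechanism: str, n: int = 5000, seed: int = 1):
    """Return (y_true, y_observed) lists; y_observed uses None for missing values.

    x is always observed and correlated with y; only y can go missing.
    """
    rng = random.Random(seed)
    y_true, y_obs = [], []
    for _ in range(n):
        x = rng.gauss(0, 1)
        y = x + rng.gauss(0, 1)
        if mechanism == "MCAR":
            p_miss = 0.3                      # unrelated to x or y
        elif mechanism == "MAR":
            p_miss = 0.6 if x > 0 else 0.1    # depends only on the observed x
        elif mechanism == "MNAR":
            p_miss = 0.6 if y > 0 else 0.1    # depends on the value that goes missing
        else:
            raise ValueError(f"unknown mechanism: {mechanism}")
        y_true.append(y)
        y_obs.append(None if rng.random() < p_miss else y)
    return y_true, y_obs


def complete_case_shift(mechanism: str) -> float:
    """Mean of the observed y minus mean of the full y (0 = no bias from dropping cases)."""
    y_true, y_obs = simulate(mechanism)
    return mean(v for v in y_obs if v is not None) - mean(y_true)


if __name__ == "__main__":
    for m in ("MCAR", "MAR", "MNAR"):
        print(m, round(complete_case_shift(m), 3))
```

Under MCAR, dropping incomplete cases leaves the mean essentially unbiased. Under MAR, the naive observed mean is biased, but the bias can be corrected by conditioning on the observed `x`, for example in an imputation model. Under MNAR, the observed data alone cannot correct the bias.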

Imputation during modelling

- mi() function of the brms package (Bürkner, 2024)

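- one-step imputation: missing values are imputed during model fitting rather than in a separate step beforehand

- the model formula specifies which variables are included in the imputation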
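As a sketch of what one-step imputation means, assume (hypothetically) that a predictor $x$ has missing values and an auxiliary variable $z$ is fully observed. The missing entries $x^{\text{mis}}$ are treated as unknown parameters and estimated together with the main model, so their uncertainty carries over into the regression coefficients. The symbols below are illustrative and do not name the study's variables.

```latex
\begin{aligned}
A_{ij} &= \beta_0 + \beta_1\,\text{Condition}_{ij} + \beta_2\,x_{ij} + u_{0j} + \varepsilon_{ij},
  \qquad u_{0j} \sim \mathcal{N}(0, \tau^2),\ \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2),\\
x_{ij} &\sim \mathcal{N}\!\left(\gamma_0 + \gamma_1 z_{ij},\ \sigma_x^2\right),
  \qquad x_{ij}^{\text{mis}} \text{ estimated jointly with } \beta,\ \gamma
\end{aligned}
```

In brms, this structure is expressed with `mi()`, e.g. `bf(accuracy ~ condition + mi(x) + (1 | participant)) + bf(x | mi() ~ z) + set_rescor(FALSE)`. The variable names are placeholders.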

References
